SDG 9: Industry, innovation and infrastructure

Will it make itself? Without human input? Yes, and maybe quite soon.

Overview

Automation and robotics are the main trends in contemporary industrial production. The use of neural networks, artificial intelligence, and virtual and augmented reality is growing more common. People clearly want everything to happen by itself – not only automatically but also autonomously – without the presence of humans.

What could the future look like? A completely autonomous factory reliably producing highly individualised products in small but ever-changing batches, day and night, with long cycles and significantly lower costs than before, all without the help of humans.

A risk for the future is that robots will take over a large number of jobs. Their deployment, however, also offers the potential to harmonise human work with technology. In this respect, the challenge is wide open.

Solution and Key Innovations

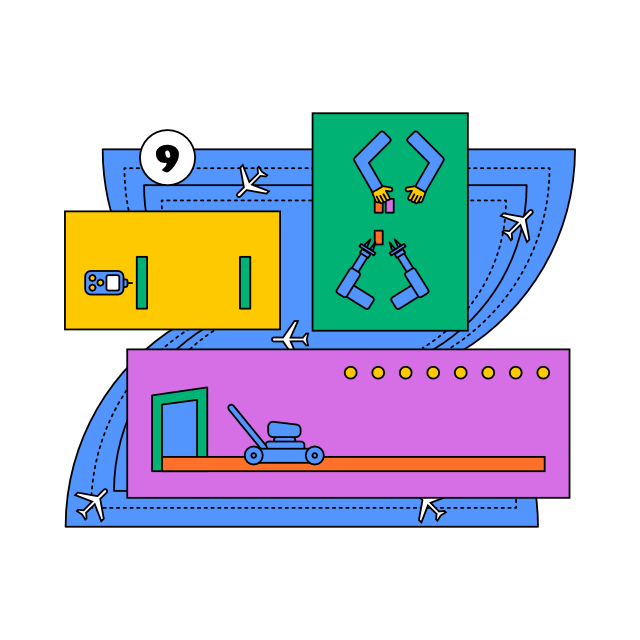

Scientists are continuously developing more easily configurable systems. The dream is versatile robots that can easily change their production programme and are completely flexible. Imagine, for example, that until 3 p.m. on 20 November 2022 a factory makes lawn mowers. As these do not sell well in winter, the factory then switches, within a very short time, to making, say, hair dryers and shavers. The change happens without human intervention: artificial intelligence decides what the factory will produce and how everything related to production will change.

Autonomous robots are a unique challenge because they require a precise combination of mechanical, electrical and software engineering and a very advanced infrastructure. Some robots can already scan their environment autonomously, process data and perform a variety of tasks and, once they have learned those tasks, repeat them and adapt them to production requirements. Yet we still have a long way to go before production is managed by artificial intelligence. The journey started with the development of cobots (collaborative robots), which can work with and learn directly from the humans involved in production. It has continued by extending the ability of robots to communicate with each other beyond the computer keyboard and the programmer’s mind.

A recent study by Italian researchers from the Department of Information Engineering, University of Pisa, Piaggio Research Centre, University of Pisa and Istituto di Linguistica Computazionale, CNR, Pisa, summarizes the experiences and trends of verbal communication in robotics based on a computational linguistic analysis performed on a database of 7,435 scientific publications over the past two decades.

The goal is for human-robot communication to be natural, accurate, and efficient, allowing the robot to cooperate, be trained by untrained humans and behave effectively in a social environment.

There are, however, many issues that need to be resolved from the technological and scientific perspective. Robots still struggle to:

- correctly pick up sound from distant speakers,

- deal with ambient noise,

- handle interrupted speech and identify the person who is speaking when more than one person is present.

The Italian company Comau has developed MI.RA/Dexter, software that allows end users to program robots in real time using simple verbal commands such as “look”, “touch”, and “execute”.

At the moment, there are three common intuitive methods of robot programming: the learning method, manual guidance and offline robot programming.

Comau’s MI.RA/Dexter uses a programming meta-language to take human syntax and convert it into robot syntax so that programming can be done using voice commands.
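To make the idea of converting human syntax into robot syntax more concrete, here is a minimal sketch in Python. It is not Comau’s meta-language, whose details are not described here. The opcode names, the `RobotInstruction` type, the `translate` function and the use of the word “then” to separate steps are all assumptions made purely for illustration, and the input is assumed to be text that a speech recogniser has already transcribed.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical mapping from spoken command words to robot-side opcodes.
# Only the three command words come from the article; the opcodes are invented.
COMMANDS = {
    "look": "SCAN_WORKSPACE",
    "touch": "MOVE_TO_CONTACT",
    "execute": "RUN_PROGRAM",
}


@dataclass(frozen=True)
class RobotInstruction:
    """One step of a robot program (illustrative structure only)."""
    opcode: str
    argument: Optional[str] = None


def translate(utterance: str) -> List[RobotInstruction]:
    """Turn a transcribed utterance into a list of robot instructions.

    Steps are separated by the word "then". Each step must start with a
    known command word; any remaining words become the step's argument.
    """
    instructions = []
    for clause in utterance.lower().split(" then "):
        words = clause.split()
        if not words:
            continue  # skip empty clauses
        verb, rest = words[0], words[1:]
        if verb not in COMMANDS:
            raise ValueError(f"unknown command: {verb!r}")
        argument = " ".join(rest) or None
        instructions.append(RobotInstruction(COMMANDS[verb], argument))
    return instructions


if __name__ == "__main__":
    for step in translate("look bracket then touch bracket then execute"):
        print(step)
```

A real system would also have to cope with recognition errors, synonyms and safety checks before any instruction reaches the robot; the sketch only shows the translation step itself.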

As robots become increasingly autonomous and capable, humans need to understand their actions, intentions, capabilities, and limitations, hence the term “intelligible robots.” Such a robot verbally communicates what it is doing, what it is going to do and why. This kind of one-way communication is quite easy to achieve.

An example of unique research in this area is the “Imitation learning of industrial robots using language” project implemented at the Czech Technical University in Prague at the Czech Institute of Informatics, Robotics and Cybernetics. Their software makes it possible to teach a robot to perform a given task by observing and imitating the movements of a human who demonstrates the activity and complements it with a verbal description. Both modalities (visual and linguistic) can support each other in case of uncertainty and missing information.
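How two modalities can compensate for each other can be illustrated with a simple late-fusion sketch. This is not the method used in the Czech Technical University project, which is not detailed here. It assumes, hypothetically, that a vision module and a language module each output a probability for every candidate action, and it combines them by multiplying and renormalising. The function name `fuse`, the action labels and the small floor value `EPS` are all illustrative assumptions.

```python
from typing import Dict, Optional

# Small floor so that an action missing from one modality is not ruled out entirely.
EPS = 1e-6


def fuse(
    visual: Optional[Dict[str, float]],
    language: Optional[Dict[str, float]],
) -> Dict[str, float]:
    """Combine per-action probabilities from two modalities.

    If one modality is missing (None), the other is used on its own.
    Otherwise the probabilities are multiplied per action and renormalised,
    so a confident modality can resolve the uncertainty of the other.
    """
    if visual is None and language is None:
        raise ValueError("at least one modality is required")
    if visual is None:
        return dict(language)
    if language is None:
        return dict(visual)

    actions = set(visual) | set(language)
    scores = {a: visual.get(a, EPS) * language.get(a, EPS) for a in actions}
    total = sum(scores.values())
    return {a: s / total for a, s in scores.items()}


if __name__ == "__main__":
    # Vision cannot tell the two actions apart; the verbal description can.
    visual = {"pick": 0.5, "push": 0.5}
    language = {"pick": 0.9, "push": 0.1}
    fused = fuse(visual, language)
    print(max(fused, key=fused.get))
```

In this toy case the camera alone is undecided, and the verbal description tips the decision. When no description is given at all, the function falls back to the visual estimate.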

Localization systems, wireless communication, sensors, and lasers are a significant step on the way towards autonomous robots. Robots used in medicine – where lasers play a crucial role – are a separate chapter. Nanorobots and biorobotics, i.e., working in the “nano” and “submicron” worlds, promise a huge new perspective on the applicability of research results.

Key Questions

- Which countries in Europe and the world are the most advanced in robotics and automation?

- Is there a fully autonomous factory anywhere in the world?

- How are the Czechs doing?

Key Words

Robot, AI, artificial intelligence, AR, VR, cloud, blockchain, computer, simulation, autonomous